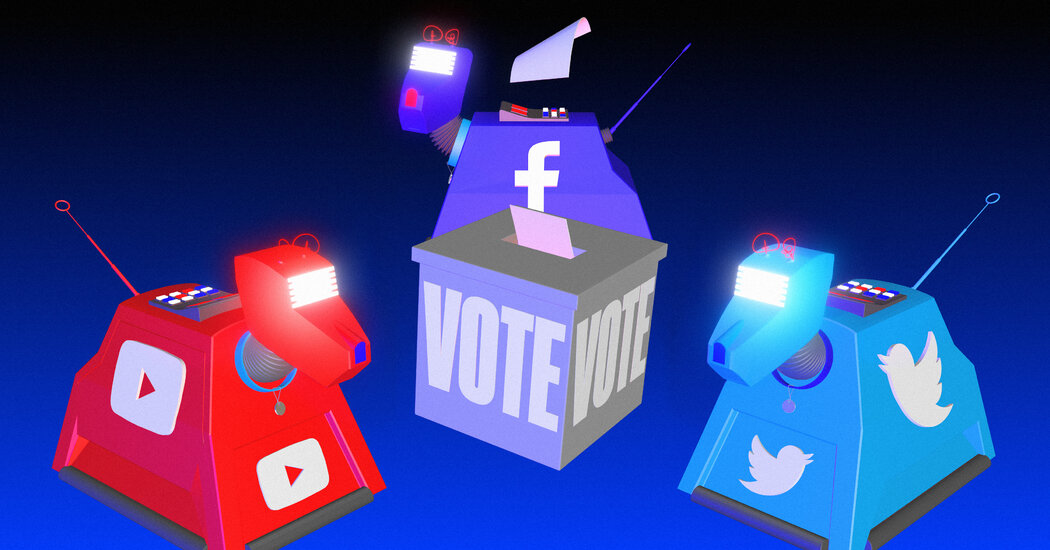

The sites are key conduits for communication and information. Here’s how they plan to handle the challenges facing them before, on and after Tuesday.

By Mike Isaac, Kate Conger and Daisuke Wakabayashi

SAN FRANCISCO — Facebook, YouTube and Twitter were misused by Russians to inflame American voters with divisive messages before the 2016 presidential election. The companies have spent the past four years trying to ensure that this November isn’t a repeat.

They have spent billions of dollars improving their sites’ security, policies and processes. In recent months, with fears rising that violence may break out after the election, the companies have taken numerous steps to clamp down on falsehoods and highlight accurate and verified information.

We asked Facebook, Twitter and YouTube to walk us through what they were, are and will be doing before, on and after Tuesday. Here’s a guide.

Before the election

Since 2016, Facebook has poured billions of dollars into beefing up its security operations to fight misinformation and other harmful content. It now has more than 35,000 people working on this area, the company said.

One team, led by a former National Security Council operative, has searched for “coordinated inauthentic behavior” by accounts that work in concert to spread false information. That team, which delivers regular reports, will be on high alert on Tuesday. Facebook has also worked with government agencies and other tech companies to spot foreign interference.

To demystify its political advertising, Facebook created an ad library so people can see what political ads are being bought and by whom, as well as how much those entities are spending. The company also introduced more steps for people who buy those ads, including a requirement that they live in the United States. To prevent candidates from spreading bad information, Facebook stopped accepting new political ads on Oct. 20.

At the same time, it has tried highlighting accurate information. In June, it rolled out a voter information hub with data on when, how and where to register to vote, and it is promoting the feature atop News Feeds through Tuesday. It also said it would act swiftly against posts that tried to dissuade people from voting, had limited forwarding of messages on its WhatsApp messaging service and had begun working with Reuters on how to handle verified election results.

Facebook has kept making changes up to the last minute. Last week, it said it had turned off recommendations for political and social groups and had temporarily removed a feature from Instagram's hashtag pages to slow the spread of misinformation.

Election Day

On Tuesday, an operations center with dozens of employees — what Facebook calls a “war room” — will work to identify efforts to destabilize the election. The team, which will work virtually because of the coronavirus pandemic, has already been in action and is operating smoothly, Facebook said.

Facebook's app will also look different on Tuesday. To prevent candidates from prematurely and inaccurately declaring victory, the company plans to add a notification at the top of News Feeds telling people that no winner has been declared and that none will be until news outlets like Reuters and The Associated Press verify the election results.
