Israeli scientists use a $300 living room projector to trick Tesla’s Autopilot feature with fake signs and lane markers displayed on the road

- Researchers at Ben-Gurion University in Israel tested Tesla’s Autopilot

- They used commonly available TV projectors to cast false images on the road

- They tricked the car into reacting to false lane markers, speed limits, and more

- The Tesla’s responses were relatively minor, but could still be exploited

A research team at Ben-Gurion University has created a simple projection system that can trick Tesla’s Autopilot into seeing things that aren’t actually there.

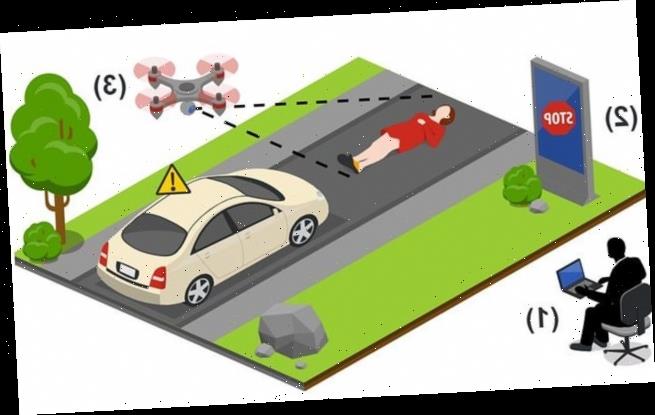

Using commercially available drones and a cheap projector, the kind a person might use to watch television in an apartment or small home, the team projected a series of deceptive images onto the road.

The images included false traffic lines, a false speed limit sign, and an image of Elon Musk himself, projected onto the road as if he were a pedestrian in danger.


Researchers at Ben-Gurion University in Israel successfully tricked Tesla’s Autopilot feature using a drone and an inexpensive living room projector to display false road signs and images of pedestrians who weren’t actually in the road.

The researchers collectively labeled all these visual illusions ‘phantoms,’ according to a report in Ars Technica.

While the Tesla they tested reacted to every phantom in some way, most of its responses were fairly mild.

When confronted with the possibility that it might run over a phantom Elon Musk, the Autopilot system slowed only slightly, from 18mph to 14mph.

In another test, they found that projecting a road sign with a false speed limit for just 125 milliseconds was enough for the car’s camera sensors to register it, though the car’s behavior did not change immediately.

When false white traffic lines were projected onto the road, indicating a hard left turn on a stretch of straight road, the Tesla didn’t actually make a hard left turn but did drift slightly to the left.

Although the response was mild, at a busier time of day it could have caused the car to drift into oncoming traffic.

Tesla’s current guidelines for Autopilot ask the driver to remain fully attentive, keep their hands on the wheel, and be ready to take over control of the vehicle at all times.

Many of the phantom projections were of such low resolution that they blended easily into the background, and many passengers would likely have missed them altogether.

Using a low-resolution projector that displays images at 854×480 with a brightness of just 100 lumens, researchers were able to make a Tesla in Autopilot mode slow down, thinking it was about to collide with a pedestrian.

One of the projectors used in the experiments had a resolution of just 854×480 and a brightness of only 100 lumens.

The researchers point to the car’s susceptibility to such subtle environmental cues as a potential flaw that nefarious actors could exploit.

Interestingly, they also suggest their phantoms might be used as the basis of a new kind of Turing Test, allowing researchers to differentiate computer operators from human operators based on how they respond to complex environmental stimuli.

Timeline of fatal crashes tied to Tesla Autopilot

January 20, 2016 in China: Gao Yaning, 23, died when the Tesla Model S he was driving slammed into a road sweeper on a highway near Handan, a city about 300 miles south of Beijing. Chinese media reported that Autopilot was engaged.

May 7, 2016 in Williston, Florida: Joshua D. Brown, 40, of Canton, Ohio died when cameras in his Tesla Model S failed to distinguish the white side of a turning tractor-trailer from a brightly lit sky.

The National Transportation Safety Board (NTSB) found that the truck driver’s failure to yield the right of way and the car driver’s inattention due to overreliance on vehicle automation were the probable cause of the crash.

The NTSB also noted that Tesla Autopilot permitted the car driver to become dangerously disengaged from driving. A DVD player and Harry Potter movies were found in the car.

March 23, 2018 in Mountain View, California: Apple software engineer Walter Huang, 38, died in a crash on U.S. Highway 101 with the Autopilot on his Tesla engaged.

The vehicle accelerated to 71 mph seconds before crashing into a freeway barrier, federal investigators found.

The NTSB, in a preliminary report on the crash, also said that data shows the Model X SUV did not brake or try to steer around the barrier in the three seconds before the crash in Silicon Valley.

March 1, 2019 in Delray Beach, Florida: Jeremy Banner, 50, died when his 2018 Tesla Model 3 slammed into a semi-truck.

NTSB investigators said Banner turned on Autopilot about 10 seconds before the crash, and the system did not execute any evasive maneuvers to avoid the collision.
