Facebook has teamed up with police in the US and UK to train its AI using videos of guns so it can better detect violent content

- An AI used by Facebook is being trained to identify violent videos

- The company will train the system on body-cam footage of firearms training

- Facebook hopes the AI can better identify violent videos before they spread

- It will also broaden its definition of terrorism to combat extremism

Facebook will work with law enforcement organizations to train artificial intelligence to recognize violent videos.

According to Facebook, the effort aims to crack down on the hate and extremism that have found their way onto the platform and to prevent that toxic content from spreading.

Training will use body-cam footage of firearms training provided by U.S. and U.K. government and law enforcement agencies to develop systems that can automatically detect first-person violent events without also flagging similar footage from movies or video games.

Facebook is also expanding its definition of terrorism to include not just acts of violence intended to achieve a political or ideological aim, but also attempts at violence, especially when aimed at civilians with the intent to coerce and intimidate.

Facebook is using videos of guns to help teach an AI how to identify violent videos uploaded to the platform before they have a chance to spread

‘While our previous definition [of terrorism] focused on acts of violence intended to achieve a political or ideological aim, our new definition more clearly delineates that attempts at violence, particularly when directed toward civilians with the intent to coerce and intimidate, also qualify,’ wrote the company in a blog post.

Facebook has been working to limit the spread of extremist material on its service, so far with mixed success.

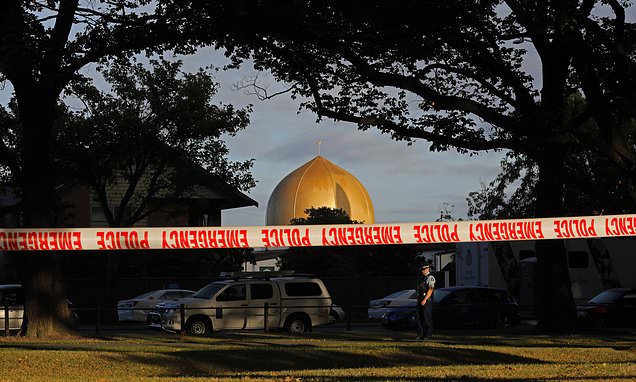

In March, Facebook’s AI systems were unable to detect live-streamed video of a mass shooting at a mosque in Christchurch, New Zealand.

As a result, the video spread across the world and prompted calls to bolster the company’s ability to mitigate toxic content.

Later that same month, the company expanded its definition of prohibited content to include U.S. white nationalist and white separatist material in addition to that from international terrorist groups.

The company says it has banned 200 white supremacist organizations and removed 26 million pieces of content related to global terrorist groups like ISIS and al Qaeda.

Facebook says an overwhelming 99 percent of that removed content was taken off the platform ‘proactively’ before it had a chance to proliferate.

Facebook has been under greater scrutiny from lawmakers and regulators to rein in aspects of its platform that may affect privacy and spread fake news

Combating extremism is just one of many problems Facebook has tried to address over the past two years. In July, the Federal Trade Commission approved a $5 billion fine against the company over its privacy practices.

More recently, a group of state attorneys general launched its own antitrust investigation into Facebook, which mirrors broader investigations into big tech companies by Congress and the U.S. Justice Department.

According to Dipayan Ghosh, a former Facebook employee and White House tech policy adviser who is currently a Harvard fellow, more regulation might be needed to deal with the problem of extremist material.

‘Content takedowns will always be highly contentious because of the platforms’ core business model to maximize engagement,’ said Ghosh.

‘And if the companies become too aggressive in their takedowns, then the other side — including propagators of hate speech — will cry out.’

HOW DOES FACEBOOK PLAN TO IMPROVE PRIVACY?

In a March 6 blog post, Facebook CEO Mark Zuckerberg promised to rebuild the company around six ‘privacy-focused’ principles:

- Private interactions

- Encryption

- Reducing permanence

- Safety

- Interoperability

- Secure data storage

Zuckerberg promised end-to-end encryption for all of the company’s messaging services, which will be combined in a way that allows users to communicate across WhatsApp, Instagram Direct, and Facebook Messenger.

He refers to this as ‘interoperability.’

He also said that, moving forward, the firm won’t hold onto messages or stories for ‘longer than necessary’ or ‘longer than people want them.’

This could mean, for example, that users could set messages to auto-delete after a month or even after a few minutes.

‘Interoperability’ will ensure messages remain encrypted even when jumping from one messaging service, such as WhatsApp, to another, like Instagram, Zuckerberg says.

Facebook also hopes to improve users’ trust in how it stores their data.

Zuckerberg promised the site ‘won’t store sensitive data in countries with weak records on human rights like privacy and freedom of expression in order to protect data from being improperly accessed.’
